Activity 16: Support Vector Machines

For this activity [1, 2], I used the extracted fruit color features in

which is a quadratic, and hence convex, problem. Using the CVXPY library [3], we can set up the above objective and constraint as-is and directly obtain

so we can plot the margins on opposite sides of the decision line using trigonometric identities:

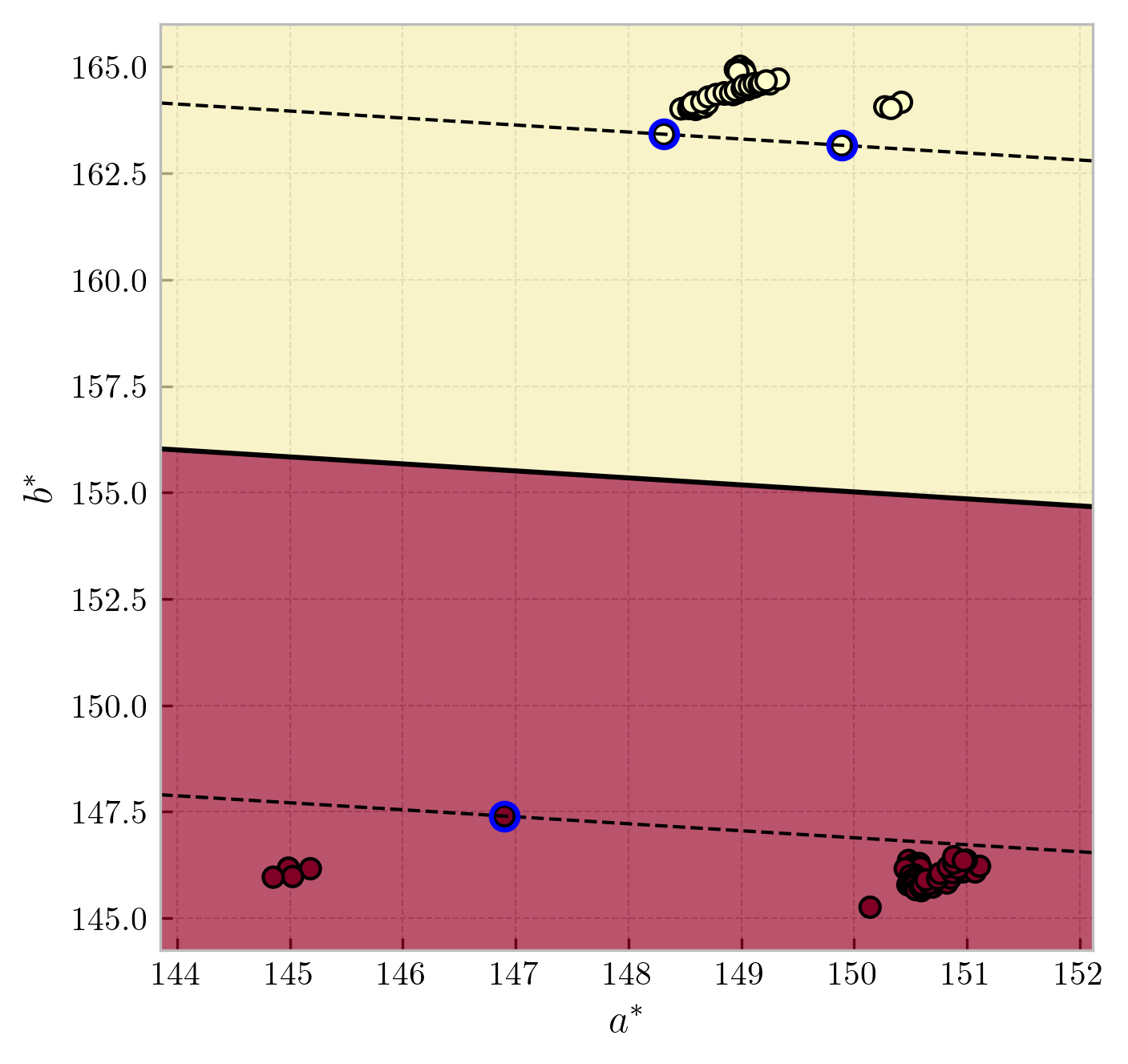

Figure 1 shows the optimum decision boundary with maximized margins in the

Figure 1: Decision boundary for oranges and apples data in -1 (oranges), while the red region corresponds to +1 (apples). The vectors highlighted in blue are the support vectors. The solid line is the decision boundary, while the dashed lines are the margins.
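As a rough illustration of how the elements in the caption could be derived from a fitted boundary, the sketch below picks out the support vectors and labels a grid of points as -1 or +1. It assumes a hypothetical six-point toy set together with that set's analytic hard-margin solution; neither the data nor the weights are the results of this activity, and the names are illustrative only.

```python
import numpy as np

# Hypothetical toy data and its known hard-margin solution (not the fruit data).
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0],
              [2.0, 2.0], [3.0, 2.0], [2.0, 3.0]])
y = np.array([-1, -1, -1, 1, 1, 1])
w = np.array([2.0 / 3.0, 2.0 / 3.0])
b = -5.0 / 3.0

# Functional margin y_i (w . x_i + b); it equals 1 exactly on the margins,
# so the samples that attain it are the support vectors.
functional_margin = y * (X @ w + b)
support_mask = np.isclose(functional_margin, 1.0, atol=1e-6)
support_vectors = X[support_mask]

# Label every point of a coarse grid by the sign of the decision function,
# which is what shading the two regions of the plot amounts to.
x1, x2 = np.meshgrid(np.linspace(-1, 4, 6), np.linspace(-1, 4, 6))
grid = np.column_stack([x1.ravel(), x2.ravel()])
region = np.where(grid @ w + b >= 0, 1, -1).reshape(x1.shape)
```

With a solver in the loop, the equality test would need a looser tolerance, since numerical solutions only satisfy the margin constraints approximately.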

References

- M. N. Soriano, A16 - Support vector machines (2019).

- O. Veksler, CS434a/541a: Pattern recognition - lecture 11 (n.d.).

- S. Diamond and S. Boyd, CVXPY: A Python-embedded modeling language for convex optimization, Journal of Machine Learning Research 17(83), 1-5 (2016).