Activity 13: Perceptron

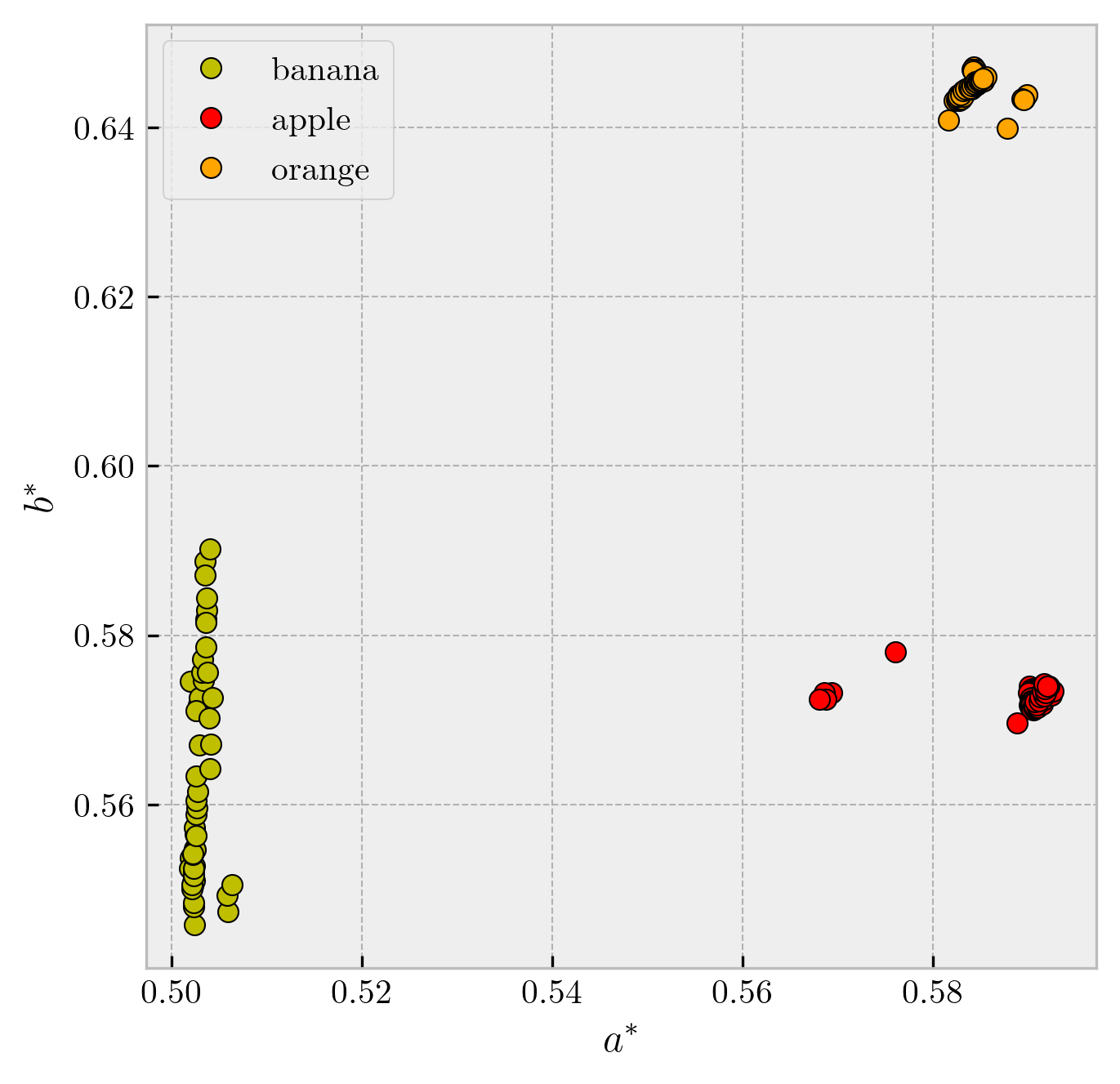

For this activity [1], I used the features extracted from the fruits (50 each of apples, mangoes, and bananas) in the previous activity. The feature space in

where

Figure 1: Feature space in

Figure 2:
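The perceptron learning rule behind this activity can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the code used to produce the figures. It assumes two classes labeled -1 and +1, one feature vector per fruit, and a bias weight on a constant input of 1. The function names `train_perceptron` and `predict`, the learning rate, and the epoch limit are all hypothetical choices.

```python
import random


def train_perceptron(X, y, lr=0.1, epochs=100, seed=0):
    """Train a single perceptron with the error-correction rule.

    X: list of feature vectors; y: labels in {-1, +1} (assumed encoding).
    Returns weights w, where w[0] is the bias weight.
    """
    rng = random.Random(seed)
    n_features = len(X[0])
    # Small random initial weights; w[0] multiplies a constant bias input of 1.
    w = [rng.uniform(-0.5, 0.5) for _ in range(n_features + 1)]
    for _ in range(epochs):
        errors = 0
        for x, target in zip(X, y):
            xb = [1.0] + list(x)  # prepend the bias input
            a = sum(wi * xi for wi, xi in zip(w, xb))  # weighted sum
            pred = 1 if a >= 0 else -1  # step activation
            if pred != target:
                # Update only on a misclassification: w <- w + lr * (target - pred) * x
                w = [wi + lr * (target - pred) * xi for wi, xi in zip(w, xb)]
                errors += 1
        if errors == 0:  # every training sample is classified correctly
            break
    return w


def predict(w, x):
    """Classify one feature vector with trained weights w."""
    a = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1 if a >= 0 else -1


# Hypothetical, linearly separable 2-D toy features (not the fruit data).
X = [(0.9, 0.8), (0.8, 0.9), (1.0, 1.0), (0.1, 0.2), (0.2, 0.1), (0.0, 0.0)]
y = [1, 1, 1, -1, -1, -1]
w = train_perceptron(X, y)
print([predict(w, x) for x in X])
```

For the fruit data, `X` would hold each fruit's extracted features and `y` its class label. A single perceptron only separates two classes, so the three fruits would have to be handled pairwise or one class against the rest.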

References

- [1] M. N. Soriano, A13 - Perceptron (2019).