Activity 15: Expectation Maximization

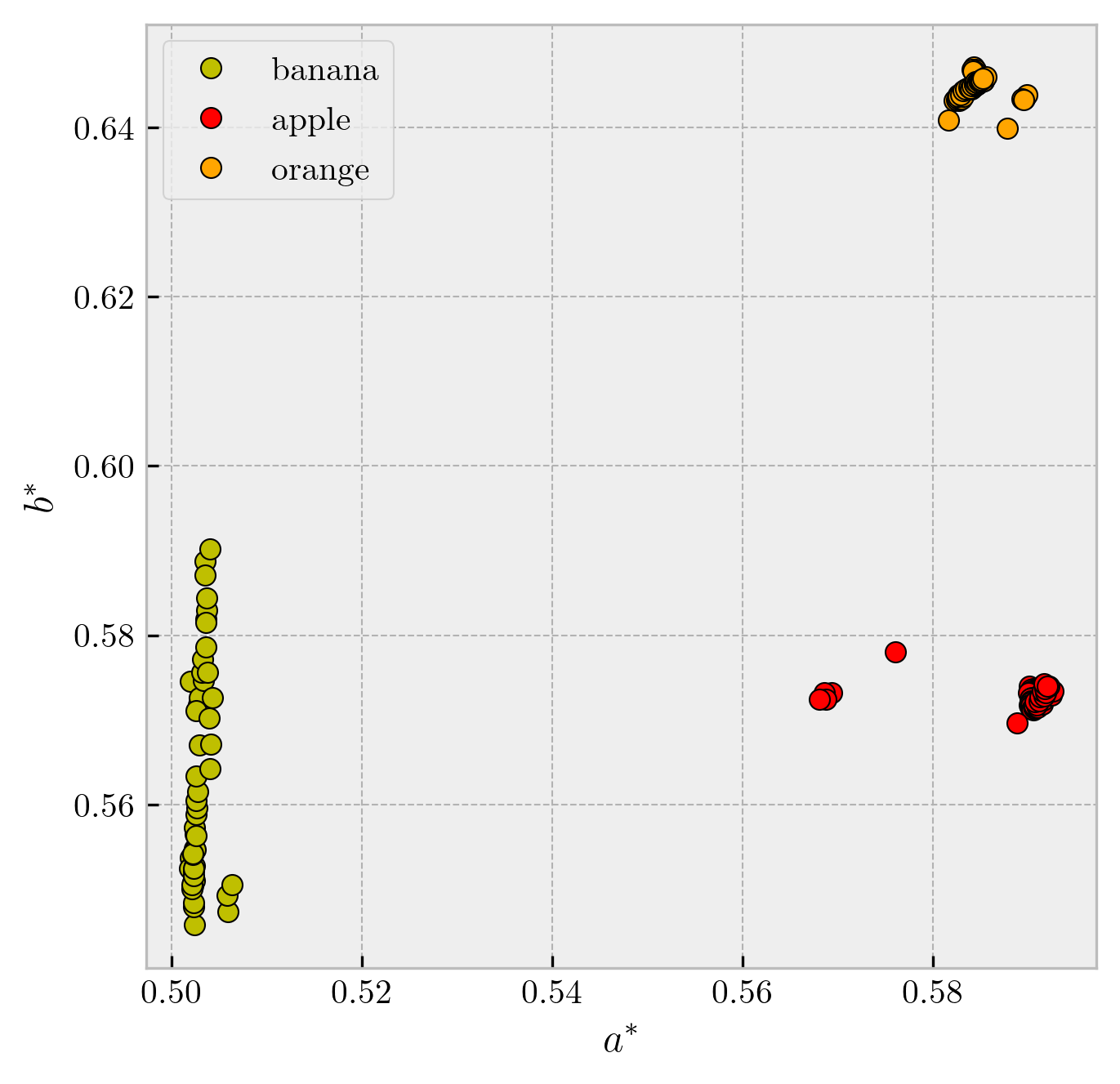

For this activity [1], I used the separated banana, apple, and orange feature data from a previous activity. The fruits form clear clusters in feature space (Figure 1).

Since we are working only with two dimensions (two features), we assume a 2D Gaussian distribution, given by
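In its standard form, the bivariate Gaussian density with mean $\boldsymbol{\mu}$ and covariance matrix $\Sigma$ is

$$p(\mathbf{x}\mid\boldsymbol{\mu},\Sigma)=\frac{1}{2\pi\,|\Sigma|^{1/2}}\exp\left[-\frac{1}{2}(\mathbf{x}-\boldsymbol{\mu})^{\mathsf{T}}\Sigma^{-1}(\mathbf{x}-\boldsymbol{\mu})\right].$$

A minimal NumPy sketch of this density is shown below. The function name `gaussian_2d` and the one-sample-per-row array layout are assumptions made for illustration, not necessarily the code used for this activity.

```python
import numpy as np

def gaussian_2d(x, mu, cov):
    """Evaluate a 2D Gaussian density at each row of x (shape (N, 2)).

    Hypothetical helper: mu has shape (2,), cov has shape (2, 2).
    """
    x = np.atleast_2d(np.asarray(x, dtype=float))
    diff = x - np.asarray(mu, dtype=float)
    cov = np.asarray(cov, dtype=float)
    # Normalization constant 1 / (2 * pi * sqrt(det(cov)))
    norm = 1.0 / (2.0 * np.pi * np.sqrt(np.linalg.det(cov)))
    # Quadratic form (x - mu)^T cov^{-1} (x - mu), one value per sample
    maha = np.einsum("ni,ij,nj->n", diff, np.linalg.inv(cov), diff)
    return norm * np.exp(-0.5 * maha)
```

Inverting the covariance once per call, rather than once per sample, is the kind of saving that matters when the density is evaluated for every point in every iteration.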

In the interest of computational efficiency, we define an intermediate matrix that is reused throughout one entire iteration, avoiding redundant calculation of exponentials and matrix inversions.

We then iterate with the update rules

until the log-likelihood exceeds a preset threshold. The log-likelihood is given by
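For a mixture of $K$ Gaussians with mixing weights $\alpha_l$, the standard log-likelihood is

$$\ln\mathcal{L}=\sum_{i=1}^{N}\ln\sum_{l=1}^{K}\alpha_l\,p(\mathbf{x}_i\mid\boldsymbol{\mu}_l,\Sigma_l).$$

The whole iteration can be sketched as follows, using the textbook EM updates for a Gaussian mixture. Several things here are assumptions rather than details taken from the original code: the intermediate matrix is taken to be the weighted densities $\alpha_l\,p(\mathbf{x}_i\mid\boldsymbol{\mu}_l,\Sigma_l)$, the names `em_gmm`, `_weighted_densities`, `ll_target`, and `max_iter` are hypothetical, and the iteration cap is added as a safeguard in case the threshold is never reached.

```python
import numpy as np

def _weighted_densities(x, mu, cov, alpha):
    """Assumed intermediate matrix: w[i, l] = alpha_l * p(x_i | mu_l, cov_l)."""
    w = np.empty((x.shape[0], mu.shape[0]))
    for l in range(mu.shape[0]):
        diff = x - mu[l]
        # One inversion and one determinant per cluster per iteration
        maha = np.einsum("ni,ij,nj->n", diff, np.linalg.inv(cov[l]), diff)
        norm = 2.0 * np.pi * np.sqrt(np.linalg.det(cov[l]))
        w[:, l] = alpha[l] * np.exp(-0.5 * maha) / norm
    return w

def em_gmm(x, mu, cov, alpha, ll_target, max_iter=200):
    """EM for a 2D Gaussian mixture, starting from the given initial guesses.

    x: (N, 2) samples, mu: (K, 2), cov: (K, 2, 2), alpha: (K,).
    Stops once the log-likelihood exceeds ll_target or after max_iter updates.
    """
    x = np.asarray(x, dtype=float)
    # Copy the initial guesses so the caller's arrays are not modified
    mu, cov, alpha = (np.array(a, dtype=float) for a in (mu, cov, alpha))
    n, k = x.shape[0], mu.shape[0]
    for _ in range(max_iter):
        w = _weighted_densities(x, mu, cov, alpha)
        if np.sum(np.log(w.sum(axis=1))) > ll_target:
            break
        # E-step: posterior probability of cluster l given sample i
        resp = w / w.sum(axis=1, keepdims=True)
        # M-step: re-estimate weights, means, and covariances
        nk = resp.sum(axis=0)
        alpha = nk / n
        mu = (resp.T @ x) / nk[:, None]
        for l in range(k):
            diff = x - mu[l]
            cov[l] = (resp[:, l, None] * diff).T @ diff / nk[l]
    # Log-likelihood of the parameters actually returned
    w = _weighted_densities(x, mu, cov, alpha)
    return mu, cov, alpha, np.sum(np.log(w.sum(axis=1)))
```

Because EM never decreases the log-likelihood, a threshold set above the maximum attainable value would loop forever without a cap, which is why `max_iter` is included in this sketch.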

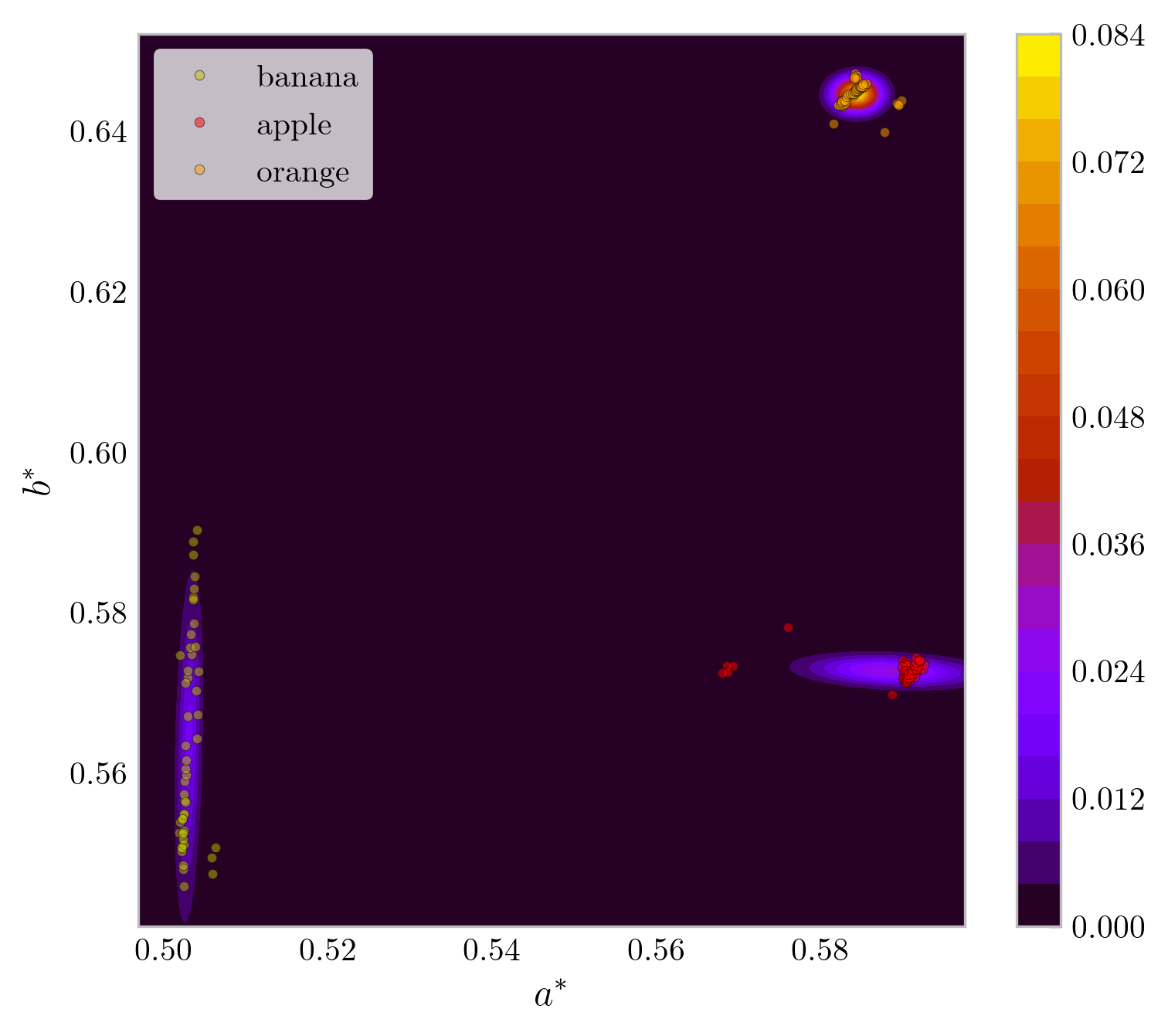

Figure 1: Estimated PDF in the feature space.

References

1. M. N. Soriano, A15 - Expectation maximization (2019).

2. C. Chan and J. McCarthy, Expectation maximization (EM) algorithm (2017).